HUNTSVILLE – One of the most agonizing experiences a cancer patient suffers is waiting without knowing: waiting for a diagnosis, waiting to get test results back, waiting to learn the outcome of treatment protocols.

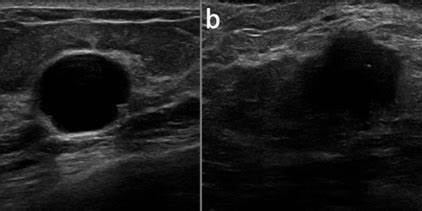

To address the problem, a researcher at The University of Alabama in Huntsville (UAH) co-authored a paper in the Nature Portfolio journal Scientific Reports that studies the use of artificial intelligence and neural networks to significantly cut the time required for medical professionals to classify lesions in breast cancer ultrasound images. Accurate classification is pivotal for early diagnosis and treatment, and a deep-learning approach can effectively represent and utilize the digital content of images for more precise and rapid medical image analysis.

A lecturer of biological sciences at UAH, Dr. Keka Biswas examines human cancerous cells in a collaborative approach with biologists, mathematicians and statisticians in the field of mathematical biology.

Recent advances in AI and medical imaging have led to the widespread use of deep-learning technology, particularly in image processing and classification.

“Deep learning is a subfield of machine learning that employs neural network-based models to imitate the human brain’s capacity to analyze huge amounts of complicated data in areas such as image recognition,” Biswas said. “The applications include cancer subtype discovery, text classification, medical imaging, etc.

“With AI, you can actually use these advances during surgery to see what stage the cancer is in, and the imaging of it is a much faster turnaround time.”

Breast ultrasound imaging is useful for detecting masses and distinguishing benign from malignant ones, but evaluating imaging reporting and data system features is difficult and time-consuming for practitioners and radiologists. The study investigated breast cancer imaging features in light of the need for rapid, precise classification and analysis of medical images of breast lesions.

“While progress has been made in the diagnosis, prognosis and treatment of cancer patients, individualized and data-driven care remains a challenge,” Biswas said. “AI has been used to predict and automate many cancers and has emerged as a promising option for improving health care accuracy and patient outcomes.”

AI applications in oncology include risk assessment, early diagnosis, patient prognosis estimation and treatment selection, based on deep-learning knowledge. Biswas’ study investigates the relationship between breast cancer imaging features and the roles of inter- and extra-lesional tissues and their impact on refining the performance of deep-learning classification. These advances are all predicated on the different ways benign and malignant tumors affect neighboring tissues, such as the pattern of growth and border irregularities, the degree of penetration into adjacent tissue and tissue-level changes.

“This is where deep learning comes in, looking into the deeper tissues and the outside tissue, and that can give us datasets to work with,” Biswas said.
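As a rough illustration of what tissue "inside" and "outside" a lesion could mean computationally, the sketch below splits a tiny binary lesion mask into the lesion itself and a surrounding band by growing the mask outward. The function names, the 4-neighbor growth rule and the one-pixel band width are assumptions made for this illustration; the article does not describe how the study delineated these regions.

```python
def dilate(mask, steps=1):
    """Grow a binary mask outward by `steps` pixels (4-neighbor rule)."""
    rows, cols = len(mask), len(mask[0])
    current = [row[:] for row in mask]
    for _ in range(steps):
        grown = [row[:] for row in current]
        for r in range(rows):
            for c in range(cols):
                if current[r][c]:
                    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        nr, nc = r + dr, c + dc
                        if 0 <= nr < rows and 0 <= nc < cols:
                            grown[nr][nc] = 1
        current = grown
    return current


def surrounding_band(mask, width=1):
    """Return the pixels within `width` of the lesion but not inside it."""
    grown = dilate(mask, width)
    return [
        [int(g and not m) for g, m in zip(grown_row, mask_row)]
        for grown_row, mask_row in zip(grown, mask)
    ]


# Hypothetical 5x5 mask: 1 marks lesion pixels, 0 marks everything else.
lesion = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
band = surrounding_band(lesion, width=1)
for row in band:
    print(row)
```

Features could then be computed separately over `lesion` and `band`, which is one simple way to compare a tumor's interior with the tissue bordering it.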

Working to speed up hope

Researchers use AI and pre-training to work with “neural network datasets,” collections of data used to train a neural network, which is a type of machine-learning algorithm inspired by the human brain. During training, the network learns from labeled examples to identify patterns and make predictions on new data.

A model is first trained on a large dataset to learn broad features and patterns. The resulting “pre-trained” model can then be fine-tuned on smaller, task-specific datasets to achieve better performance for a particular research goal, leveraging the knowledge gained from the large pre-training dataset to make training on smaller, specialized datasets more efficient.
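The idea can be sketched in a few lines of Python. The example below is a toy, not the study's pipeline: a fixed set of weights stands in for a pre-trained feature extractor, and only a small new classification layer is trained on four labeled samples. All names, weights and data here are hypothetical and chosen only to make the sketch runnable.

```python
import math

# Hypothetical "pre-trained" layer: these weights stay frozen and stand in
# for knowledge learned earlier from a large dataset.
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    """Apply the frozen layer followed by a ReLU; never updated below."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)))
            for row in FROZEN_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(samples, labels, epochs=500, lr=0.5):
    """Train only a new logistic-regression head on the frozen features."""
    feats = [extract_features(x) for x in samples]
    weights, bias = [0.0] * len(feats[0]), 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            error = sigmoid(sum(w * fi for w, fi in zip(weights, f)) + bias) - y
            weights = [w - lr * error * fi for w, fi in zip(weights, f)]
            bias -= lr * error
    return weights, bias


def predict(x, weights, bias):
    f = extract_features(x)
    return int(sigmoid(sum(w * fi for w, fi in zip(weights, f)) + bias) >= 0.5)


# Small, made-up task-specific dataset (1 = one class, 0 = the other).
samples = [[2.0, 0.0], [3.0, 1.0], [0.0, 2.0], [1.0, 3.0]]
labels = [1, 1, 0, 0]

weights, bias = fine_tune_head(samples, labels)
predictions = [predict(x, weights, bias) for x in samples]
print(predictions)
```

Because the frozen layer already separates the two classes, the small head needs only a handful of labeled samples, which is the efficiency gain that pre-training is meant to provide.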

In 2023, Biswas took a serendipitous trip to a conference in South Africa, where she met Dr. Luminita Moraru, a professor at the University of Galati in Romania who has a similar career focus but approaches the challenge using different tools.

“I met Dr. Moraru, who was from a department of chemistry at her university where she has a modeling and simulation lab,” Biswas said. “But she didn’t have the biological or anatomical background for this kind of research.”

By teaming up, the two researchers found they could complement one another’s skillsets to delve much deeper into data challenges like these. “Then, about the same time, one of my friends got detected with breast cancer, and she had given up hope,” Biswas said. “It affected her tremendously. The thing that was scary was how long it was taking to go through all the pathological testing.”

Events soon took an even more personal turn for the UAH educator.

“This summer (2024), I actually experienced this all for myself,” she said. “I had gone in for a routine exam and told the doctor what my symptoms were, and she said let’s run a biopsy. Two days later I got a call, you need to come in, and I knew something was wrong. ‘You have cancer,’ my doctor said. ‘Do you know which stage it is in?’ I asked. She couldn’t tell me a stage, even with the pathological tests that had been done. I have a child, a family. I needed to know which stage it is in, what the treatment is going to be. That took another three to four weeks for the results to come in. The delay was the major thing – do I need surgery? How long will it take?

“It all was a much longer process. My oncologist was very frustrated. I had a diagnosis, but none of the imaging was telling us what stage it was in. Whether it has metastasized or not. I needed to be aware of how soon I could recover.”

Beating the waiting game

Machine-learning algorithms use data such as the investigations performed, scans conducted, biopsy results, patients’ medical history and other information to forecast or diagnose a cancerous condition and help determine its stage.

“Initially, the model in the study was trained using more than one million well-annotated images,” Biswas said. “The pre-trained models can classify images into 1,000 object categories, because they learn meaningful feature representations for a wide range of images.”

Breast ultrasound imaging is most notably limited by its low sensitivity and specificity for small masses or solid tumors. In addition, for an accurate diagnosis, the required number of scans to cover the entire breast depends on breast size.

On average, two or three volumes are acquired for each breast per examination, so large volumes of breast ultrasound images have to be reviewed, which demands a daunting amount of time and attention for accurate disease diagnosis.
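Sensitivity and specificity have precise definitions that are easy to compute once predictions are compared with known outcomes. The short sketch below shows the arithmetic: sensitivity is the share of malignant cases correctly flagged, and specificity is the share of benign cases correctly cleared. The ten labels are invented for illustration and are not results from the study.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives (1 = malignant, 0 = benign)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def sensitivity(tp, fn):
    """Fraction of malignant cases that were detected."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """Fraction of benign cases that were correctly ruled out."""
    return tn / (tn + fp)


# Made-up ground truth and predictions for ten lesions.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
print(f"sensitivity = {sensitivity(tp, fn):.2f}")  # 3 of 4 malignant found
print(f"specificity = {specificity(tn, fp):.2f}")  # 5 of 6 benign cleared
```

Each missed malignant lesion (a false negative) lowers sensitivity, and each benign lesion flagged as malignant (a false positive) lowers specificity.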

“For efficiency in time and robust classification, we focused on discrete or localized areas inside and around the breast lesions as significant attributes,” Biswas said. “The novelty of this study lies in considering the features as extracted from the tissue inside the tumor and the role of inter- and extra-lesional tissues. Based on how these were affecting the neighboring cells, three criteria were addressed: pattern of growth and border irregularities; the degree of penetration; and tissue-level changes.

“We used specific algorithms for morphological classification. To get quicker results, we looked at the degree of machine learning that allows us to not only look on the surface, but also deeper inside. And this can be used in robotics surgery, reducing the surgery time significantly. With robotics and machine learning, you have a lot more images to look at, and it allows the surgeon to not only do the surgery, but also the diagnosis and retreatment, three things all at the same time.

“By reducing the number of false positives and negatives, DL networks provide highly accurate breast cancer detection. These methods can be used to diagnose different breast cancer subtypes at various stages by analyzing clinical and test data. To address the limited number of available annotated images, various DL networks pre-trained on large image databases are now available.”

Looking to the future, Biswas sees wide-ranging applications for these technologies.

“The system proposed in our study outperforms the existing breast cancer detection algorithms reported in the literature and will facilitate this challenging and time-consuming classification task,” she said. “In future work, the proposed system could be effective in assisting in the diagnosis of other cancerous lesions in colorectal, endometrial and melanoma tumors as well.

“Additionally, various AI-powered data generators could be used to overcome the shortage of datasets.”